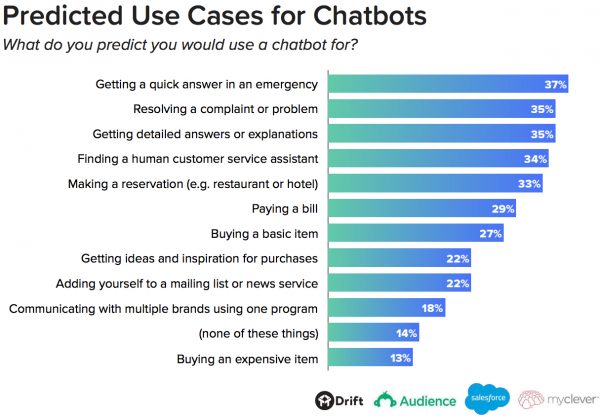

Chatbots are all the rage right now and are popping up everywhere. For customers, that can mean buying movie tickets in under a minute or getting instant answers to routine questions.

Like any new technology, the massive upside comes with added complexity and security risks that need to be evaluated. With the right implementation, chatbots can be a secure and safe way to interact with customers.

Chatbots are everywhere

It’s not just your imagination: chatbots really are all over the place. In fact, 80% of companies plan to have some form of chatbot by 2020. Deployments range from a chat window on a company’s website to bots integrated directly with popular messaging platforms such as Messenger, WhatsApp or Telegram.

Chatbot interactions range from simple queries, such as store hours or whether a product is in stock, to more complex processes such as handling refunds, purchases and customer complaints. When managed properly, this is a boon for both customers and merchants: customers can resolve many simple issues faster than they could through traditional customer service, which frees up support staff to handle more complex tasks, cuts costs and increases customer satisfaction.

eTeam has put together a full report on chatbots and other emerging tech trends for retail and e-commerce.

Protecting data

While many chats may seem innocuous at first glance, protecting the privacy of conversations with chatbots has to be a top priority for enterprises that use them. Even a request such as ‘where is the nearest store?’ reveals the user’s location, personal data that must be protected from inadvertently falling into the hands of third parties. The risk only grows as transaction data, payment information and even medical records are shared with chatbots.

For the most part, chatbots don’t present security issues that haven’t already been hashed out. Thankfully, mature frameworks such as Ruby on Rails and Laravel already build in established best practices, giving engineers the tools they need to make sure data is transmitted securely and stored safely.
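As a concrete illustration of safe storage, here is a minimal Python sketch of one safeguard a chatbot backend might apply before writing transcripts to its database: masking anything that looks like a payment card number. The helper names and the placeholder text are hypothetical, and the pattern is a heuristic (13 to 19 digits, optionally separated by spaces or hyphens, confirmed with the Luhn checksum), not eTeam’s actual implementation. Better still is keeping payment details out of the chat channel entirely.

```python
import re

# Heuristic (assumption): 13-19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum used by card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


def redact_card_numbers(message: str) -> str:
    """Hypothetical helper: mask likely card numbers before a transcript is stored."""
    def mask(match: re.Match) -> str:
        digits = re.sub(r"[ -]", "", match.group())
        if not luhn_valid(digits):
            return match.group()  # probably an order or reference number; keep it
        return "[card ending " + digits[-4:] + "]"

    return CARD_CANDIDATE.sub(mask, message)
```

Digit runs that fail the Luhn check, such as most order numbers, are left untouched, so the stored transcript stays useful to support staff.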

For chatbots that handle customer service within an account, e.g. returns and transactions, using industry-standard authentication methods is the obvious and most prudent step. In most cases the chatbot is not the weakest link, and direct attacks on encrypted chats and databases are rare. The most common attack vector for chatbots is weak authentication, so it’s imperative to encourage clients to use strong passwords, enable two-factor authentication and stay alert to phishing attempts.
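To make the two-factor step concrete, here is a minimal Python sketch of how a chatbot backend could require a second factor before acting on account-level requests. It assumes the customer has already logged in with a password and has enrolled an authenticator app that uses time-based one-time passwords (TOTP, RFC 6238). The intent names and the `authorize_intent` helper are hypothetical, and a production system would normally rely on a vetted library rather than hand-rolled code.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional

# Hypothetical intent names: requests that touch account data need a second factor.
SENSITIVE_INTENTS = {"refund", "return", "purchase", "order_history"}


def totp(secret_b32: str, for_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 time-based one-time password with HMAC-SHA1."""
    key = base64.b32decode(secret_b32, casefold=True)
    now = time.time() if for_time is None else for_time
    counter = int(now // step)
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation, as defined in RFC 4226
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def verify_totp(secret_b32: str, submitted: str, for_time: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept the code for the current time step or +/- `window` steps of clock drift."""
    if not (submitted.isascii() and submitted.isdigit() and len(submitted) == digits):
        return False
    now = time.time() if for_time is None else for_time
    for drift in range(-window, window + 1):
        expected = totp(secret_b32, now + drift * step, step, digits)
        if hmac.compare_digest(expected, submitted):  # constant-time comparison
            return True
    return False


def authorize_intent(intent: str, secret_b32: str, submitted: Optional[str]) -> bool:
    """Let public questions through; require a valid code for account actions."""
    if intent not in SENSITIVE_INTENTS:
        return True  # e.g. store hours or stock levels: no account data involved
    return submitted is not None and verify_totp(secret_b32, submitted)
```

Public questions like store hours pass straight through, while a refund request is only processed once the customer supplies a valid six-digit code. The one-step `window` tolerates small clock differences between the customer’s phone and the server.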

Social engineering

Chatbots have the potential to protect both consumers and merchants from social engineering attacks, which are on the rise. In its worst form, a scam artist poses as a customer and convinces a customer service representative to divulge confidential data such as a credit card number. Wherever human judgement comes into play, social engineering will always be a risk. Removing the possibility of reaching a person who is able to share sensitive account data is a massive step forward in security.

Jedi mind tricks don’t work on chatbots. No matter what story a scammer has concocted, a bot isn’t going to fall for it. This provides a level of security beyond what a traditional customer service rep can offer.

Secure APIs make secure chatbots

The same steps that developers need to take to secure any site are even more important when it comes to chatbots. In concrete terms, the APIs that bots rely on must be free of common vulnerabilities that would leave them open to hackers.

Cross-site scripting (XSS) is a serious threat to chatbots and can result in personal information being stolen or even a malicious bot posing as an authentic company communication portal. This isn’t a problem with chatbots per se; it’s an issue of unsecured APIs. That’s why every chatbot that eTeam builds is scanned with Sapience to detect vulnerabilities as part of our comprehensive security testing.
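In a web chat widget, XSS typically arises when text that arrives through the API (a customer’s message, or a bot reply that echoes user input) is inserted into the page as raw HTML. The minimal Python sketch below uses the standard library’s `html.escape` to show the basic defence: escape all untrusted text before rendering it. The `render_chat_message` helper and its markup are hypothetical. Template engines in mature frameworks, such as ERB in Rails and Blade’s `{{ }}` syntax in Laravel, escape output by default, which is one reason following framework conventions matters.

```python
import html


def render_chat_message(sender: str, text: str) -> str:
    """Hypothetical helper: build the HTML for one chat bubble.

    Both `sender` and `text` may come from users or API responses, so neither
    is trusted as markup; html.escape converts <, >, &, " and ' to entities.
    """
    safe_sender = html.escape(sender, quote=True)
    safe_text = html.escape(text, quote=True)
    return f'<div class="chat-message"><strong>{safe_sender}</strong>: {safe_text}</div>'


if __name__ == "__main__":
    # An injected script tag becomes inert, visible text instead of running in the browser.
    print(render_chat_message("Bot", "<script>alert(document.cookie)</script>"))
```

A message containing `<script>` is displayed as harmless text rather than being executed, so a compromised or malicious message cannot hijack the chat window.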

Once all the APIs a chatbot uses have been secured, the best practices of a mature development framework have been followed and clients are encouraged to use secure login practices, the vast majority of possible ways a hacker could exploit a chatbot are closed.

Security testing goes beyond scanning APIs with a tool like Sapience. We always perform comprehensive UX testing, with particular emphasis on manual testing. A well-designed user experience prevents phishing, encourages security best practices and reassures clients that chatbots are part of a secure online interaction with a business.

Chatbots are the future

Chatbots don’t have to be limited to retail, either. We’ve built a chatbot for Taskware, a product that makes it fast and easy to coordinate work between developers and large groups of people working on microtasks. The chatbot makes it easier for microtask workers to communicate with the interface while all of the data gets transferred to an API that is simple for developers to access. This is the future of human-computer interactions.

There’s no sense in avoiding chatbots out of unfounded security fears, as bots can be a great way to improve customer satisfaction. After all, who doesn’t relish solving routine customer service issues instantly instead of through a long chain of emails or days of phone tag? Besides improving the overall customer experience, chatbots save businesses resources and allow a shop to serve more customers at once.

With the right security testing and a user experience designed to gently educate consumers about online safety, chatbots are a safe way to conduct business for retailers.